Artificial Intelligence Regains Its Allure

Post-human evolution may soon pass from the realm of science fiction, like “The Terminator,” into reality.

By JOHN MARKOFF

MOUNTAIN VIEW, California. The notion that a self-aware computing system would emerge spontaneously from the interconnections of billions of computers and computer networks goes back in science fiction at least as far as Arthur C. Clarke’s “Dial F for Frankenstein.” A prescient short story that appeared in 1961, it foretold an ever-more-interconnected telephone network that spontaneously acts like a newborn baby and leads to global chaos as it takes over financial, transportation and military systems.

Today, artificial intelligence, once the preserve of science fiction writers and eccentric computer prodigies, is back in fashion. It is getting serious attention from NASA, from Silicon Valley companies like Google and from a new round of start-ups that are designing everything from next-generation search engines to machines that listen or that are capable of walking around in the world. Artificial intelligence’s new respectability is turning the spotlight back on the question of where the technology might be heading and, more ominously perhaps, whether computer intelligence will surpass our own, and how quickly.

The concept of ultrasmart computers, machines with “greater than human intelligence,” was dubbed “The Singularity” in a 1993 paper by the computer scientist and science fiction writer Vernor Vinge. He argued that the acceleration of technological progress had led to “the edge of change comparable to the rise of human life on Earth.” This thesis has long struck a chord here in Silicon Valley.

Artificial intelligence is already used to automate and replace some human functions with computer-driven machines. These machines can see and hear, respond to questions, learn, draw inferences and solve problems. But for the Singularitarians, artificial intelligence refers to machines that will be both self-aware and superhuman in their intelligence, and capable of designing better computers and robots faster than humans can today.

Such a shift, they say, would lead to a vast acceleration in technological improvements of all kinds.

The idea is not just the province of science fiction authors; a generation of computer hackers, engineers and programmers have come to believe deeply in the idea of exponential technological change as described by Gordon Moore, a co-founder of the chip maker Intel.

In 1965, Dr. Moore first described the repeated doubling of the number of transistors on silicon chips with each new technology generation, which led to an acceleration in the power of computing. Since then, “Moore’s Law” (which is not a law of physics but rather a description of the rate of industrial change) has come to personify an industry that lives on Internet time, where the Next Big Thing is always just around the corner.

Several years ago Raymond Kurzweil, an artificial-intelligence pioneer, took the idea one step further in his 2005 book, “The Singularity Is Near: When Humans Transcend Biology.” He sought to expand Moore’s Law to encompass more than just processing power and to simultaneously predict with great precision the arrival of post-human evolution, which he said would occur in 2045.

In Dr. Kurzweil’s telling, rapidly increasing computing power in concert with cyborg humans would then reach a point when machine intelligence not only surpassed human intelligence but took over the process of technological invention, with unpredictable consequences.

Ken MacLeod, a science fiction writer, described the idea of the singularity as “the Rapture of the nerds.” Kevin Kelly, an editor at Wired magazine, notes, “People who predict a very utopian future always predict that it is going to happen before they die.”

However, Mr. Kelly himself has not refrained from speculating on where communications and computing technology is heading. He is at work on his own book, “The Technium,” forecasting the emergence of a global brain: the idea that the planet’s interconnected computers might someday act in a coordinated fashion and perhaps exhibit intelligence. He just isn’t certain about how soon an intelligent global brain will arrive.

Others who have observed the increasing power of computing technology are even less sanguine about the future outcome. William Joy, a computer designer and venture capitalist, for example, wrote a pessimistic essay in Wired in 2000 that argued that humans are more likely to destroy themselves with their technology than create a utopia. Mr. Joy, a co-founder of Sun Microsystems, still believes that. “I wasn’t saying we would be supplanted by something,” he said. “I think a catastrophe is more likely.”





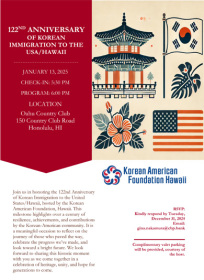

케이타운 1번가

오피니언

옥세철 논설위원

옥세철 논설위원말살되고 있는 유럽의 성탄절 전통, 그 원인은…

외로운 이웃들

조지 F·윌 워싱턴포스트 칼럼니스트

조지 F·윌 워싱턴포스트 칼럼니스트 [조지 F. 윌 칼럼] MIT에 대한 트럼프의 무분별한 공격

전지은 수필가

전지은 수필가 ‘소명’이라 알아듣고

최문선 / 한국일보 논설위원

최문선 / 한국일보 논설위원[지평선] 쿠팡의 “한국말 몰라요”

잇단 총격·테러 음모… 한인타운 안전 우려

2025년, 성찰과 감사의 마무리를

김인자 시인ㆍ수필가

김인자 시인ㆍ수필가 [금요단상] AI와 동거, 그 실체

한영일 / 서울경제 논설위원

한영일 / 서울경제 논설위원[만화경] 웰다잉 인센티브

1/3지사별 뉴스

빅애플 2025년 송년회

빅애플(대표 여주영)은 지난 19일 퀸즈 베이사이드 소재 산수갑산2 연회장에서 2025년 송년회를 열고 회원들간 화합과 친목을 도모했다. 이날…

재미 한인이산가족 상봉 길 열렸다

미주한인 이산가족 북한 고향 길 열려

“그리워하면 언젠가 만나게 되는…” 노래 가사처럼 그리워하면 다시 만날 수 있을까. 재미한인이산가족들은 그렇게 그리워하며 반세기가 넘게 기다리…

워싱턴 메트로지역 5세이하 아동인구 20년새 1.8%⇩

연말 ‘로드레이지’ 비극… 한인 총격 피살

연말을 맞아 도로 위에서 순간적으로 벌어진 운전 중 시비가 40대 한인 가장의 총격 피살 비극으로 이어졌다. 워싱턴주 레이시 경찰국과 서스턴 …

엡스타인 파일 공개 다음날 트럼프 사진 삭제…야당서 탄핵 경고
