Roughly speaking, there are four steps to every decision. First, you perceive a situation. Then you think of possible courses of action. Then you calculate which course is in your best interest. Then you take the action.

Over the past few centuries, public policy analysts have assumed that step three is the most important. Economic models and entire social science disciplines are premised on the assumption that people are mostly engaged in rationally calculating and maximizing their self-interest.

But during this financial crisis, that way of thinking has failed spectacularly. As Alan Greenspan noted in his Congressional testimony last month, he was “shocked” that markets did not work as anticipated. “I made a mistake in presuming that the self-interests of organizations, specifically banks and others, were such as that they were best capable of protecting their own shareholders and their equity in the firms.”

So perhaps this will be the moment when we alter our view of decision-making. Perhaps this will be the moment when we shift our focus from step three, rational calculation, to step one, perception.

Perceiving a situation seems, at first glance, like a remarkably simple operation. You just look and see what's around. But the operation that seems simplest is actually the most complex; it's just that most of the action takes place below the level of awareness. Looking at and perceiving the world is an active process of meaning-making that shapes and biases the rest of the decision-making chain.

Economists and psychologists have been exploring our perceptual biases for four decades now, with the work of Amos Tversky and Daniel Kahneman, and also with work by people like Richard Thaler, Robert Shiller, John Bargh and Dan Ariely.

My sense is that this financial crisis is going to amount to a coming-out party for behavioral economists and others who are bringing sophisticated psychology to the realm of public policy. At least these folks have plausible explanations for why so many people could have been so gigantically wrong about the risks they were taking.

Nassim Nicholas Taleb has been deeply influenced by this stream of research. Taleb not only has an explanation for what’s happening, he even saw it coming. His popular books “Fooled by Randomness” and “The Black Swan” were broadsides at the risk-management models used in the financial world and beyond.

In “The Black Swan,” Taleb wrote, “The government-sponsored institution Fannie Mae, when I look at its risks, seems to be sitting on a barrel of dynamite, vulnerable to the slightest hiccup.” Globalization, he noted, “creates interlocking fragility.” He warned that while the growth of giant banks gave the appearance of stability, in reality it raised the risk of a systemic collapse: “when one fails, they all fail.”

Taleb believes that our brains evolved to suit a world much simpler than the one we now face. His writing is idiosyncratic, but he does touch on many of the perceptual biases that distort our thinking: our tendency to see data that confirm our prejudices more vividly than data that contradict them; our tendency to overvalue recent events when anticipating future possibilities; our tendency to spin concurring facts into a single causal narrative; our tendency to applaud our own supposed skill in circumstances when we’ve actually benefited from dumb luck.

And looking at the financial crisis, it is easy to see dozens of errors of perception. Traders misperceived the possibility of rare events. They got caught in social contagions and reinforced each other’s risk assessments. They failed to perceive how tightly linked global networks could transform small events into big disasters.

Taleb is characteristically vituperative about the quantitative risk models, which try to model something that defies modelization. He subscribes to what he calls the tragic vision of humankind, which “believes in the existence of inherent limitations and flaws in the way we think and act and requires an acknowledgment of this fact as a basis for any individual and collective action.” If recent events don’t underline this worldview, nothing will.

If you start thinking about our faulty perceptions, the first thing you realize is that markets are not perfectly efficient, people are not always good guardians of their own self-interest, and there might be limited circumstances when government could usefully slant the decision-making architecture (see “Nudge” by Thaler and Cass Sunstein for proposals). But the second thing you realize is that government officials are probably going to be even worse perceivers of reality than private business types. Their information feedback mechanism is more limited, and, being deeply politicized, they're even more likely to filter out inconvenient facts.

This meltdown is not just a financial event, but also a cultural one. It’s a big, whopping reminder that the human mind is continually trying to perceive things that aren’t true, and not perceiving them takes enormous effort.

스마터리빙

more [ 건강]

[ 건강]이제 혈관 건강도 챙기자!

[현대해운]우리 눈에 보이지 않기 때문에 혈관 건강을 챙기는 것은 결코 쉽지 않은데요. 여러분은 혈관 건강을 유지하기 위해 어떤 노력을 하시나요?

[ 건강]

[ 건강]내 몸이 건강해지는 과일궁합

[ 라이프]

[ 라이프]벌레야 물럿거라! 천연 해충제 만들기

[ 건강]

[ 건강]혈압 낮추는데 좋은 식품

[현대해운]혈관 건강은 주로 노화가 진행되면서 지켜야 할 문제라고 인식되어 왔습니다. 최근 생활 패턴과 식생활의 변화로 혈관의 노화 진행이 빨라지고

사람·사람들

more많이 본 기사

- ‘헉’ 오바마케어 보험료가 연 4만불… 1

- ‘남가주 사랑의 교회’ 이원준 담임목사 부임

- 가주서 노동법 위반 업주 처벌 대폭 … 1

- “ATM기 사용하기 겁나네”

- ‘K-도넛’, 남가주 진출·본격 확장

- 법무부, 엡스타인 자료 추가 공개… “전용기에 트럼프 8번 타”

- 이번엔 ‘먹는 비만약’ 경쟁…알약 위고비, 미국서 판매 승인

- 에어프랑스 엔진 화재 5천피트 급강하 ‘아찔’

- 엡스타인 파일서 지웠던 트럼프 사진 복원

- ICE 홈디포 급습 한인 체포

- 수학강사 맞아? 류시원♥ 19세 연하 아내, 민폐하객 미모 보니

- 美 경제 3분기 4.3% ‘깜짝 성장’…강한 소비가 성장견인

- 46회째 1등 안나온 파워볼 복권…당첨금 약 16억 달러로 껑충

- 중국 시온교회 목회자 체포… 미주 한인교계 등 ‘기도와 지원’

- 트럼프, 美 3분기 깜짝성장 “관세 덕” 주장하며 대법원 압박

- 올해 워싱턴DC 식당 92곳 폐업…‘역대 최다’

- 트럼프 “국가안보 위해 그린란드 필요…우리가 가져야”

- MD 로우스 직원, 지게차로 70대 노인 살해

- 법정구속 오열→불복..박수홍 친형 부부, 기어이 대법원으로

- 美서 돌아온 푸틴 특사…러, 우크라 평화안 대응 촉각

- 엔비디아칩 중국 밀반입?… “미국 정부, 싱가포르 업체 조사중”

- ‘거액 탈세·통관 사기’ 한인 통관브로커 중형

- ‘연방하원 도전장’ 척 박 예비후보 후원모임

- 美·인니, 관세협정 모든 쟁점에 합의…내년 1월 양국 정상 서명

- ‘뉴욕한인의 밤’ 일반한인 참여 문턱 낮췄다

- 4인 가족 보험료가 4만불까지… 중산층‘불안’

- “美국방부, 中격납고에 ICBM 100기이상 장전돼 있을것으로 판단”

- 악명 높은 갱단 연루 한인 기소

- 워싱턴 일원서 600만명 장거리 여행 떠난다

- 남가주 사랑의교회 노창수 목사 은퇴식

- 밀알·노숙자센터에 1,500달러씩 기부

- 2명에 모두 7,500달러 장학금

- 닻올린 내란전담재판부 안착할까…尹측은 “위헌심판 신청”

- CBS ‘트럼프 눈치보기’?… ‘이민자 추방’ 보도 취소

- ‘올해는 ICE 이민자 체포 광풍의 해’

- 21년간 장학금 총 60만 달러 지급

- 특검, “국정농단” 건진법사 징역 5년 구형…김건희는 증언 거부

- 팰팍 상공회의소, 고교생 2명에 장학금 전달

- LAPD “툭하면 발포”… 경관 총격 사건 올들어 급증

- SI 성인데이케어센터 ‘제5회 신인가수왕 선발대회’

- 한인 최초 NASA 우주비행사 조니 김 “우주서 김치·밥 그리웠다”

- 홍진영, 주사이모와 찍은 사진에 “12년 전 촬영, 친분 없다”

- 뉴저지한인고교생들, 양로원에 핸드 로션 기증

- ‘경찰 무력사용지침 갱신 의무화’ 입법 초읽기

- 소희 떠나고 선미..10년 전 원더걸스 ‘국민 걸그룹’ 시절 “보고 싶다”

- 가족 얼굴 못 알아보고 성격 변한 부모님… “서양 기준으론 정상?”

- 재외공관들 성탄연휴 휴무 ‘제각각’

- 베네수엘라 봉쇄에 금·은 값 또 최고

- 가주에서 2026년에 시행되는 새 노동법 법안들

- 국방부 인·태 차관보 한인 존 노 인준 통과

1/5지식톡

-

미 육군 사관학교 West Poin…

0

미 육군 사관학교 West Poin…

0https://youtu.be/SxD8cEhNV6Q연락처:wpkapca@gmail.comJohn Choi: 714-716-6414West Point 합격증을 받으셨나요?미 육군사관학교 West Point 학부모 모…

-

☝️해외에서도 가능한 한국어 선생님…

0

☝️해외에서도 가능한 한국어 선생님…

0이 영상 하나면 충분합니다!♥️상담신청문의♥️☝️ 문의 폭주로 '선착순 상담'만 진행합니다.☎️ : 02-6213-9094✨카카오톡ID : @GOODEDU77 (@골뱅이 꼭 붙여주셔야합니다…

-

테슬라 자동차 시트커버 장착

0

테슬라 자동차 시트커버 장착

0테슬라 시트커버, 사놓고 아직 못 씌우셨죠?장착이 생각보다 쉽지 않습니다.20년 경력 전문가에게 맡기세요 — 깔끔하고 딱 맞게 장착해드립니다!장착비용:앞좌석: $40뒷좌석: $60앞·뒷좌석 …

-

식당용 부탄가스

0

식당용 부탄가스

0식당용 부탄가스 홀세일 합니다 로스앤젤레스 다운타운 픽업 가능 안녕 하세요?강아지 & 고양이 모든 애완동물 / 반려동물 식품 & 모든 애완동물/반려동물 관련 제품들 전문적으로 홀세일/취급하는 회사 입니다 100% …

-

ACSL 국제 컴퓨터 과학 대회, …

0

ACSL 국제 컴퓨터 과학 대회, …

0웹사이트 : www.eduspot.co.kr 카카오톡 상담하기 : https://pf.kakao.com/_BEQWxb블로그 : https://blog.naver.com/eduspotmain안녕하세요, 에듀스팟입니다…

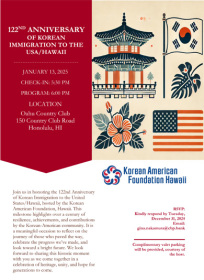

케이타운 1번가

오피니언

조환동 / 편집기획국장·경제부장

조환동 / 편집기획국장·경제부장 AI로 가속화되는 노동시장 개편

민경훈 논설위원

민경훈 논설위원‘크리스마스 캐롤’과 산타 클로스

정재민 KAIST 문술미래전략 대학원 교수

정재민 KAIST 문술미래전략 대학원 교수 [정재민의 미디어풍경] 적과의 동침, 협력하며 경쟁하기

김영화 수필가

김영화 수필가 [화요칼럼] 단호박의 온기

김정곤 / 서울경제 논설위원

김정곤 / 서울경제 논설위원[만화경] 안중근의사 유해봉환 사업

권지숙

권지숙 오후에 피다

옥세철 논설위원

옥세철 논설위원말살되고 있는 유럽의 성탄절 전통, 그 원인은…

외로운 이웃들

조지 F·윌 워싱턴포스트 칼럼니스트

조지 F·윌 워싱턴포스트 칼럼니스트 [조지 F. 윌 칼럼] MIT에 대한 트럼프의 무분별한 공격

1/3지사별 뉴스

‘연방하원 도전장’ 척 박 예비후보 후원모임

연방하원에 도전장을 낸 척 박(한국명 박영철) 예비후보 후원 모임이 지난 18일 열렸다. 척 박의 부친인 박윤용 뉴욕주하원 25선거구 (민주)…

‘경찰 무력사용지침 갱신 의무화’ 입법 초읽기

‘올해는 ICE 이민자 체포 광풍의 해’

올 한해동안 버지니아와 메릴랜드, DC 등에서 연방 이민당국에 체포된 사람이 1만명이 훌쩍 넘는 것으로 조사됐다. 또 미 전국적으로는 22만명…

“ATM기 사용하기 겁나네”

연말 ‘로드레이지’ 비극… 한인 총격 피살

연말을 맞아 도로 위에서 순간적으로 벌어진 운전 중 시비가 40대 한인 가장의 총격 피살 비극으로 이어졌다. 워싱턴주 레이시 경찰국과 서스턴 …

엡스타인 파일 공개 다음날 트럼프 사진 삭제…야당서 탄핵 경고

오늘 하루 이 창 열지 않음 닫기

.png)

댓글 안에 당신의 성숙함도 담아 주세요.

'오늘의 한마디'는 기사에 대하여 자신의 생각을 말하고 남의 생각을 들으며 서로 다양한 의견을 나누는 공간입니다. 그러나 간혹 불건전한 내용을 올리시는 분들이 계셔서 건전한 인터넷문화 정착을 위해 아래와 같은 운영원칙을 적용합니다.

자체 모니터링을 통해 아래에 해당하는 내용이 포함된 댓글이 발견되면 예고없이 삭제 조치를 하겠습니다.

불건전한 댓글을 올리거나, 이름에 비속어 및 상대방의 불쾌감을 주는 단어를 사용, 유명인 또는 특정 일반인을 사칭하는 경우 이용에 대한 차단 제재를 받을 수 있습니다. 차단될 경우, 일주일간 댓글을 달수 없게 됩니다.

명예훼손, 개인정보 유출, 욕설 등 법률에 위반되는 댓글은 관계 법령에 의거 민형사상 처벌을 받을 수 있으니 이용에 주의를 부탁드립니다.

Close

x